You’re running a website or web application, and it’s gaining popularity quickly. The problem: you’re running your website on a single, cheap VPS. It uses typical components, like MySQL or MongoDB, a web server like Apache or Nginx, some storage, and a web framework like Python Django or a single page app that uses web services. And now you need to scale your web application because things are falling apart rapidly. What to do?

This article discusses guerrilla-type options to scale your website and serve more users without spending millions, starting with outrageously simple and cheap tactics. From there, we’ll gradually continue to the more advanced and more expensive solutions.

Scale your web application using a CDN

CDNs, or Content Delivery Networks, are awesome and should be your first guerrilla move to scaling up. You probably have an idea of what a CDN is, but let’s still look at it in more detail.

How a CDN works

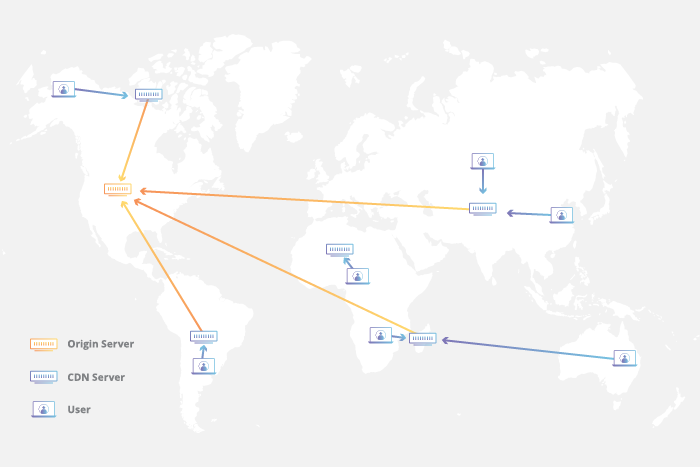

A CDN provider operates a large number of computers, which are geographically spread across the world. These are often called edge nodes. Usually, they have servers at all the strategically best places, meaning close to Internet backbones. These servers work together to deliver Internet content to users worldwide.

A CDN does so transparently through DNS, so you need to let the CDN manage DNS for your website. Now, if a user requests a page on your website, the CDN determines that user’s location and points them to the IP address of a geographically nearby server. This nearby CDN server identifies which page is requested for which domain, and if this page is cached, it delivers the cached version to the user. If it isn’t cached, the server fetches the page from your origin server first.

This is a simplified but still accurate description of how things work. Of course, in reality, there’s a lot more going on behind the scenes.
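To make the caching behavior a bit more concrete, here is a minimal Python sketch of what a single edge node does. It is a toy model under my own assumptions, not how any particular CDN is implemented: the `EdgeNode` class and the `origin` function are made up for illustration, and real edge nodes also deal with expiry, cache headers, and much more.

```python
from typing import Callable, Dict, List


class EdgeNode:
    """Toy model of a CDN edge node: serve from cache, otherwise ask the origin."""

    def __init__(self, fetch_from_origin: Callable[[str], str]) -> None:
        self._fetch_from_origin = fetch_from_origin
        self._cache: Dict[str, str] = {}
        self.hits = 0
        self.misses = 0

    def get(self, path: str) -> str:
        if path in self._cache:
            self.hits += 1
            return self._cache[path]
        # Cache miss: the only case in which the origin server sees the request.
        self.misses += 1
        body = self._fetch_from_origin(path)
        self._cache[path] = body
        return body


origin_requests: List[str] = []


def origin(path: str) -> str:
    """Stand-in for the cheap VPS; records every request that reaches it."""
    origin_requests.append(path)
    return f"<html>content of {path}</html>"


edge = EdgeNode(origin)
for _ in range(5):
    edge.get("/index.html")

print(edge.hits, edge.misses, len(origin_requests))  # prints: 4 1 1
```

Five visitors requested the page, but the origin only had to build it once.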

CDN advantages

There are a couple of obvious advantages to this approach:

- It reduces the load on the origin server (your cheap VPS) because the CDN will serve most assets directly from the cache and never hit the origin server

- The bandwidth reduction on your origin server is significant

- You’ll have faster load times because the cached pages and assets are nearby and therefore load a lot quicker for everyone, no matter their location. As a bonus, CDNs apply state-of-the-art transfer algorithms, compression, and (optional) file minification.

- A faster site these days means better ranking in Google, and obviously a better user experience.

And then there are added benefits as well that are less obvious:

- CDNs are very good at detecting DDoS attacks and will protect your site against them.

- A good CDN acts as an application firewall, blocking suspicious requests or requiring a captcha to reduce the load from unknown and suspicious robots.

- A CDN can offer you offline protection: when your site goes down or is way too slow, it will still show the user a cached copy of the site.

- Finally, the CDN acts as a proxy to your site, so if it is configured well, users will never know where your site is actually hosted. In fact, you can block every IP address other than those of the CDN from reaching your web server, which considerably reduces the attack surface of the origin server.
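The last point, letting only the CDN talk to your origin, boils down to an allowlist check. Below is a small sketch using Python's standard `ipaddress` module. The network ranges are placeholders (reserved documentation ranges), not the real addresses of any CDN; you would use the list your CDN provider publishes. In practice, you'd typically enforce this in a firewall rather than in application code, so treat this purely as an illustration of the idea.

```python
import ipaddress

# Placeholder ranges for illustration only (reserved documentation networks).
# Replace them with the ranges published by your CDN provider.
CDN_NETWORKS = [
    ipaddress.ip_network("203.0.113.0/24"),
    ipaddress.ip_network("198.51.100.0/24"),
]


def is_from_cdn(remote_addr: str) -> bool:
    """Return True if the connecting IP address belongs to one of the CDN networks."""
    addr = ipaddress.ip_address(remote_addr)
    return any(addr in network for network in CDN_NETWORKS)


print(is_from_cdn("203.0.113.7"))  # True: inside an allowed range
print(is_from_cdn("192.0.2.10"))   # False: a direct visitor, to be rejected
```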

When is a CDN the right choice?

The key to profiting from a CDN is caching. If each website page is built uniquely for each visitor, it’s generally not cacheable. Or, at least, the so-called cache hit rate will be very low. Hence, a mostly static website is an ideal candidate.

However, many sites have pages with mostly static content and only a few dynamic portions. With such a site, you can still profit from a CDN by serving the cacheable parts separately and loading the dynamic parts asynchronously with JavaScript. A good example of such a setup is often seen when a site allows comments. The main article, video, or image is part of the HTML page and can be cached easily, but the comments are only loaded through JavaScript when a user scrolls all the way to the end. It’s good for your server and for the user, who needs to pull in less data in advance.
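One common way to tell caches which responses are safe to store is the standard `Cache-Control` HTTP header. The sketch below picks a header value per request path. The paths (`/api/` for the dynamic parts), the file suffixes, and the lifetimes are assumptions I made up for this example, and how a given CDN treats these headers depends on its configuration.

```python
# File types treated as static assets in this sketch (an assumption).
STATIC_SUFFIXES = (".css", ".js", ".png", ".jpg", ".svg", ".woff2")


def cache_control_for(path: str) -> str:
    """Pick a Cache-Control header value for a request path."""
    if path.startswith("/api/"):
        # Dynamic, per-visitor data such as comments: never store it in a shared cache.
        return "private, no-store"
    if path.endswith(STATIC_SUFFIXES):
        # Static assets: cache for a year; assumes file names change when content changes.
        return "public, max-age=31536000, immutable"
    # Regular pages: let shared caches keep them for five minutes.
    return "public, max-age=300"


for path in ("/articles/scaling", "/static/site.css", "/api/comments?article=42"):
    print(path, "->", cache_control_for(path))
```

In a Django application, you would set this value as a response header in a view or middleware.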

I personally only have experience with Cloudflare. The great thing about Cloudflare is they offer a free tier that is unlimited in terms of bandwidth and the number of pages your site has. It fits well with our target of guerrilla scaling a website. You start to pay if you use more advanced options, like specific firewall rules or analytics.

If you’re running WordPress (as roughly 40% of websites do), Cloudflare also has a plugin aimed at effectively caching WordPress sites for $5 per month, which is what I use to host my Python tutorial. I’m able to cache roughly 70% of all web requests this way. I don’t strictly need it, since a cheap VPS would still suffice, but the improved load speed is noticeable to Google and to site-measuring tools like Lighthouse.

To be clear: I have no affiliation with Cloudflare and I’m not getting paid. They’re just awesome.

Scaling up your web application

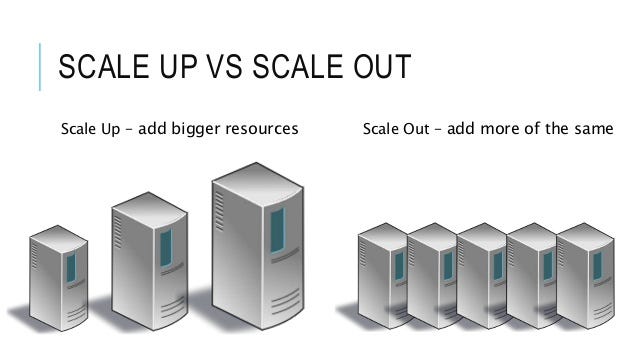

A CDN can alleviate the load on your origin server; there’s no doubt about it. But at some point, the origin server will still reach its limits. Luckily, we’ve got another straightforward trick up our sleeves: scaling up.

By scaling up, I mean throwing more hardware like CPU power and memory at your application. Most web applications run either on a VPS or on physical hardware. If you’re running on a shared hosting environment, upgrading to a dedicated VPS or server would be a good first step.

If you’re already using a VPS or dedicated hardware, you can look at upgrading it. The most important aspects of any server are:

- CPU power: how fast are the CPUs, and, more importantly, how many cores are there?

- Memory: how much working memory (RAM) is available?

- Disk storage: typically you want fast SSD drives

So to scale up, you can look for faster SSD-type disks, more memory, and more CPUs. In addition, you can look at spreading the load without adding more servers yet. E.g., you may want to serve static files (images, HTML, CSS) and your database files from separate disks to improve throughput. You might also want to write your logs to a separate disk.

If you want to do this well, you need to profile your server usage to find the bottlenecks first. Is it memory, disk speed, CPU speed, or a combination of these that’s bogging stuff down? Alternatively, if in a hurry, you can just scale it all up and hope for the best.
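As a starting point for such profiling, here is a rough sketch that takes a snapshot of CPU load, memory, and disk usage using only Python's standard library. It assumes a Linux server (the load average is Unix-only, and `/proc/meminfo` is Linux-specific) and returns `None` for values it cannot read. It is no substitute for proper monitoring over time, but it can hint at which resource is running out.

```python
import os
import shutil
from typing import Optional


def memory_available_fraction(meminfo_path: str = "/proc/meminfo") -> Optional[float]:
    """Fraction of RAM still available (Linux only), or None if it can't be read."""
    values = {}
    try:
        with open(meminfo_path) as meminfo:
            for line in meminfo:
                key, _, rest = line.partition(":")
                values[key] = int(rest.split()[0])  # values are reported in kB
    except (OSError, ValueError, IndexError):
        return None
    if "MemTotal" not in values or "MemAvailable" not in values:
        return None
    return values["MemAvailable"] / values["MemTotal"]


def server_snapshot(path: str = "/") -> dict:
    """Collect a few rough indicators of where the server is under pressure."""
    try:
        load_1min = os.getloadavg()[0]  # average number of runnable processes
    except (AttributeError, OSError):
        load_1min = None
    cores = os.cpu_count() or 1
    disk = shutil.disk_usage(path)
    return {
        # A value consistently above 1.0 suggests the CPUs can't keep up.
        "load_per_core": None if load_1min is None else load_1min / cores,
        "memory_available_fraction": memory_available_fraction(),
        "disk_used_fraction": disk.used / disk.total if disk.total else None,
    }


print(server_snapshot())
```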

Spreading services over more hardware

Again, this is an easy one. Instead of hosting your web server, database, cache, mail, search engine, and other services on one machine, you can spread this out over multiple machines.

Most VPS hosts offer virtual networking, allowing you to create a dedicated backend network that connects multiple VPSes using private IP addresses. You can get a long way by separating your database into one or more machines and hosting your website logic on another machine.

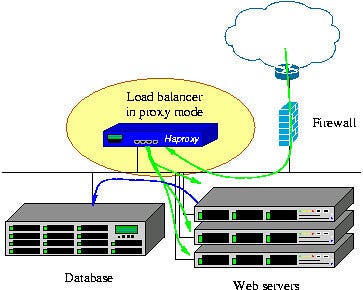

An additional trick you can use in this scenario is to look for a load balancer. Most of the time, VPS providers offer this out of the box, and it’s not difficult to set up. The load balancer spreads the load evenly across multiple servers, allowing you to serve more visitors. These servers can be web servers but can also be replica servers of your database, for example.

If you use dedicated hardware or want more control, you might want to look into HAProxy. It is the de facto standard open-source load balancer and can balance both HTTP and TCP connections.
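The simplest strategy a load balancer can use to spread requests evenly is round-robin: hand each new request to the next server in the list and start over at the end. Here is a minimal sketch of that idea. The private IP addresses are made up, and a real load balancer also performs health checks and supports smarter strategies, so this only illustrates the principle.

```python
import itertools
from collections import Counter
from typing import Sequence


class RoundRobinBalancer:
    """Hand out backends in a fixed rotation so each gets an equal share."""

    def __init__(self, backends: Sequence[str]) -> None:
        if not backends:
            raise ValueError("at least one backend is required")
        self._rotation = itertools.cycle(backends)

    def next_backend(self) -> str:
        return next(self._rotation)


# Hypothetical web servers on a private backend network.
balancer = RoundRobinBalancer(["10.0.0.11:8000", "10.0.0.12:8000", "10.0.0.13:8000"])
picks = [balancer.next_backend() for _ in range(6)]

print(Counter(picks))  # every backend handled 2 of the 6 requests
```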

So far, we have discussed relatively simple ways to scale your web application that can give you some air. However, they won’t make a world of difference when your traffic is growing by a factor of two each week. There’s a limit to how far you can scale up, but if you can do it without too much effort, it’s a cheap way to buy yourself time. Meanwhile, you can work on a plan to start scaling out.

Scaling out your web application

When all the other options are depleted, you’ve reached the ultimate way to scale, called horizontal scaling, or scaling out. Instead of beefing up individual servers, we start adding more of the same servers that do roughly the same thing. So instead of using a couple of huge servers, we use tens, or even hundreds, of regular servers. There is, theoretically, no limit to how far you can scale out. The big tech companies like Google, Facebook, and Amazon are living proof of how well this can work. These companies offer services to the entire world, apparently seamlessly scaling them up and down with the amount of load they get.

The big trick is spreading the load and data across these servers. It requires distributed algorithms to allow a database to scale horizontally. The same is true for the parallel processing of lots of data. The chances are slim that you’ll be able to implement this yourself unless you have a Master’s degree in Distributed Computing. And even if you do, it will be hard, if not impossible, to pull off by yourself or with a small team. It requires lots of knowledge and lots of people.
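To get a feeling for why this is hard, consider the most naive way to spread data over servers: hash each key and take the remainder after dividing by the number of servers. The sketch below does exactly that. The keys and server counts are made up for illustration, and this is not how any specific database does it.

```python
import hashlib


def shard_for(key: str, shard_count: int) -> int:
    """Map a key to a shard index in range(shard_count), identically on every machine."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % shard_count


keys = [f"user:{i}" for i in range(1000)]  # hypothetical record keys

# Count how many keys end up on a different server when we grow from 4 to 5 servers.
moved = sum(1 for key in keys if shard_for(key, 4) != shard_for(key, 5))
print(f"{moved} of {len(keys)} keys change shard when going from 4 to 5 servers")
```

Running it shows that most keys end up on a different server once a fifth one is added, which means shuffling most of your data around. Distributed databases use more refined techniques, such as consistent hashing, to avoid this, and that is only one of the many problems they have to solve.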

Luckily, we live in the age of cloud computing, which allows us to stand on the shoulders of giants. It has become relatively easy to build horizontally scalable systems using the services offered by Microsoft, Google, and Amazon. The problem: it’s not exactly cheap! So, to stay true to the article title, I will offer some more suggestions to consider before fully resorting to cloud providers.

Replication and clustering

The first thing to become a bottleneck is usually your database. Luckily, all the major database systems allow for some sort of clustering and/or replication. Using these options, you can spread the database load and storage over multiple machines. In combination with a load balancer, this can get you a long way.
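A common replication setup has one primary server that accepts writes and one or more replicas that serve reads. The sketch below shows the routing idea in its simplest form. The host names are hypothetical, and the rule (anything starting with `SELECT` is a read) is a deliberate simplification: it ignores transactions and the fact that replicas can lag slightly behind the primary.

```python
import itertools
from typing import Sequence


class ReplicatedDatabaseRouter:
    """Send writes to the primary and spread reads over the replicas."""

    def __init__(self, primary: str, replicas: Sequence[str]) -> None:
        self.primary = primary
        # Without replicas, reads simply fall back to the primary.
        self._read_rotation = itertools.cycle(list(replicas) or [primary])

    def node_for(self, sql: str) -> str:
        words = sql.split()
        is_read = bool(words) and words[0].upper() == "SELECT"
        return next(self._read_rotation) if is_read else self.primary


# Hypothetical host names on a private backend network.
router = ReplicatedDatabaseRouter("db-primary", ["db-replica-1", "db-replica-2"])

print(router.node_for("INSERT INTO comments (body) VALUES ('hi')"))  # db-primary
print(router.node_for("SELECT * FROM comments"))                     # db-replica-1
print(router.node_for("select * from articles"))                     # db-replica-2
```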

The downside is that such setups require serious effort and sysadmin skills to maintain. The upside is that you can do things like performing rolling upgrades, allowing you to service the cluster without downtime. It’s doable, but you need to have solid Linux and networking skills and a lot of time to learn and execute this well, or the money to hire people that can do it for you.

NoSQL

You may also want to consider switching from SQL-type databases to a NoSQL database. The latter often has much better and easier scalability. I’m personally very fond of Elasticsearch, which seamlessly scales from a small, cheap, single-node system to a system with a hundred nodes. It can be used as a document store and search engine, and you can even store small files in it. The official clients, available for several popular programming languages, spread the load over the entire cluster, so you won’t need a load balancer.

Using a hybrid model

As a time-saving alternative, you could consider a hybrid model. Once your database is starting to become the bottleneck, you can look into the many database-as-a-service offerings. These are expensive, but they scale well and don’t require any maintenance from your side. It could very well be the best option. Alternatively, you may want to take the plunge and switch to a completely cloud-native solution instead.

Cloud-native development

By cloud-native development, I mean building your application from the ground up with the cloud in mind. Cloud-based web applications tend to scale very well. When building for the cloud, you fully exploit the scalability, flexibility, and resilience of cloud computing.

Containers and microservices

Most applications in the cloud are packaged using containers, so when developing for the cloud, you’ll quickly find out that you’ll need to work with containers.

In essence, a container contains both the application and all its requirements, like system packages and software dependencies. Such containers can run anywhere, from your own PC to a private cloud, to large cloud providers like Amazon, Azure, Google, DigitalOcean, and so on.

To make this more tangible, let’s take a look at how a Python Django application would be packaged in a container. The container for such an application would contain:

- A minimal set of Linux system files

- A Python 3 installation

- Django and other required packages

- The code itself

Similarly, another container or set of containers would run the database. And perhaps you’ll have a whole battery of containers running microservices, all offering a specific functionality.

The separation into microservices is seen in a lot of big companies. The advantage is that small teams can each work on individual services, creating natural boundaries between teams and code bases. I would only recommend a microservice approach to big companies, with multiple teams. If you’re a small, one-team, or even one-person company, it’s actually a sane choice to create so-called monoliths. They are easier to manage and easier to reason about. You can always split off functionality at a later stage.

Docker and Kubernetes

The most prominent names in the world of containers are Docker and Kubernetes. Although these systems come with a learning curve, they are worth the trouble since all the major providers support them, especially Kubernetes.

I’ve written before about containers and how to create a containerized Python REST API.

Leveraging cloud services

A cloud-native application typically uses cloud-based services as well, like file storage and a cloud-based database. One well-known file storage system is Amazon’s S3, also called a cloud object storage system. A popular NoSQL database at Amazon is DynamoDB. Other cloud providers have similar products to offer. These products are extremely scalable and convenient to use. They allow for truly rapid development, but they will also drain your wallet very rapidly. However, there are many use cases where the costs are not a big issue. E.g., when your income grows linearly with the number of visitors, you probably favor growth and reduced complexity over reducing cost.

Advantages of the cloud

The separation into containers, and the separation of functionality into microservices, is typical for cloud solutions, as is the usage of cloud-based object storage and databases. The advantage is that all parts of the application can scale up and down as needed. Each container can run as a single copy, but you can just as easily add more replicas and balance the load over all the copies.
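How many replicas do you need at any given moment? A simple proportional rule goes a long way: scale the current number of replicas by the ratio between the measured load and the load you are aiming for. The sketch below implements that rule. It is similar in spirit to the formula documented for the Kubernetes Horizontal Pod Autoscaler, but the function, its parameters, and the limits are my own illustration.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_load: float,
    target_load: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
) -> int:
    """Scale the replica count in proportion to measured load versus target load."""
    if target_load <= 0:
        raise ValueError("target_load must be positive")
    wanted = math.ceil(current_replicas * current_load / target_load)
    # Stay within the configured limits to keep both cost and availability in check.
    return max(min_replicas, min(max_replicas, wanted))


# Two replicas at 90% CPU each, while we aim for 60%: scale up.
print(desired_replicas(2, current_load=90, target_load=60))  # 3

# Four replicas idling at 15% CPU: scale down.
print(desired_replicas(4, current_load=15, target_load=60))  # 1
```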

Another advantage of microservices is that they can easily be swapped with a new version, changing only part of the complete system, and in turn creating less risk. Yet another advantage is that teams can each work on their own service without getting in each other’s way, creating natural boundaries between teams. E.g., one team might prefer a Java stack, while another team prefers Python. As long as they offer a well-defined REST interface, it’s all good!

Keep learning

You should now have a general idea of how to scale up a web application. We started with cheap, easy hacks and ended with full-blown cloud-native development. To learn more, see what the big tech companies have to offer in their cloud documentation.

In addition, Red Hat has a page explaining cloud-native development. Finally, there’s always Wikipedia: start with the article on cloud-native computing.